Imperfect AI is Fun AI

Welcome back, intrepid pilots and nostalgia seekers. Today we have a deep dive into the world of NPC AI. But first, allow me to tell you a story about the first AI behavior I ever wrote.

We have to go way back to a time so shrouded in nerdiness and embarrassment that nobody in their right mind would ever revisit it. Computer camp.

Yes, 13-year-old me learned to program at a week-long camp surrounded by other uber nerds whose collective abilities quickly showed me I was not the smartest one in the room. Where I learned that you could actually be tired from just thinking all day. Where I learned that losing in Risk really sucks.

Our first task was to create a tic-tac-toe game in C++ (I really wish Python existed back then), and then create an AI player who would never lose. It was a monstrous if-else disaster of listing every possible case of what the AI should do. Eventually I got it working and it would never lose. It was a “perfect” AI player.

Soon I discovered that the game was no longer fun to play. Something about that stuck with me; a fun opponent is imperfect. If you’ve ever said “the AI is cheating” in a game, you know what I mean.

So the goal is to make AI that is human-like, instead of god-like. Well, let’s start with Behavior Trees.

Behavior Trees

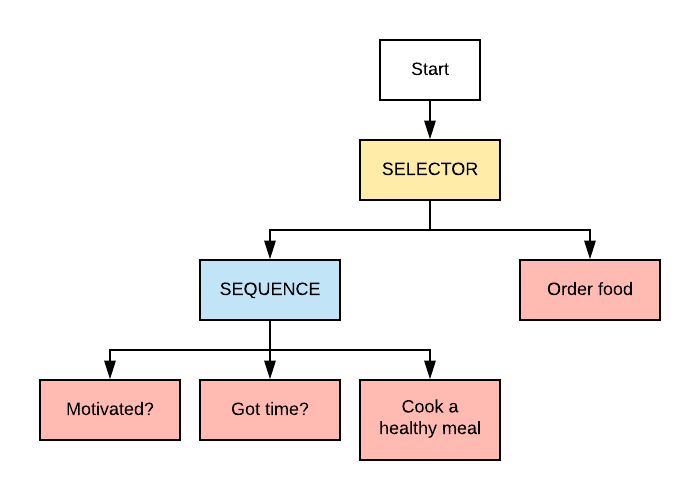

Behavior Trees are basically a flow chart of decisions and actions. For example:

My first take on AI in Cleared Hot was pretty simple: If you see an enemy, shoot them. If you have a destination, walk towards it. If you were a friendly unit and the helo landed near you, get in. It was governed by a Behavior Tree.
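To make those three rules concrete, here is a minimal Python sketch of a behavior tree for them. It is not the game's actual code: the node classes, the `Npc` fields, and the priority order (shoot, then walk, then board) are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional

SUCCESS, FAILURE = "success", "failure"


@dataclass
class Npc:
    # Hypothetical fields, invented for this sketch.
    is_friendly: bool = False
    visible_enemy: Optional[str] = None
    destination: Optional[str] = None
    helo_landed_nearby: bool = False
    last_action: str = "none"


class Condition:
    """Leaf node that asks a yes/no question about the NPC."""
    def __init__(self, check):
        self.check = check

    def tick(self, npc):
        return SUCCESS if self.check(npc) else FAILURE


class Action:
    """Leaf node that does something (here it only records its name)."""
    def __init__(self, name):
        self.name = name

    def tick(self, npc):
        npc.last_action = self.name
        return SUCCESS


class Sequence:
    """Runs children in order and stops at the first failure."""
    def __init__(self, *children):
        self.children = children

    def tick(self, npc):
        for child in self.children:
            if child.tick(npc) == FAILURE:
                return FAILURE
        return SUCCESS


class Selector:
    """Tries children in order and stops at the first success."""
    def __init__(self, *children):
        self.children = children

    def tick(self, npc):
        for child in self.children:
            if child.tick(npc) == SUCCESS:
                return SUCCESS
        return FAILURE


def build_tree():
    return Selector(
        Sequence(Condition(lambda n: n.visible_enemy is not None), Action("shoot")),
        Sequence(Condition(lambda n: n.destination is not None), Action("walk")),
        Sequence(Condition(lambda n: n.is_friendly and n.helo_landed_nearby),
                 Action("board_helo")),
        Action("idle"),
    )


npc = Npc(visible_enemy="grunt", destination="hill")
build_tree().tick(npc)
print(npc.last_action)
```

Written like this, the tree is tiny because it tracks no state at all. Every tick starts from the top and re-asks every question.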

Sounds pretty simple, right? Well, here is what the behavior tree looked like for this simple behavior.

The real culprit was this: combining State and Actions in one tree. For the non-programmers, state is something you have to keep track of about the world that will determine what you do. For example: Am I currently attacking? Am I in the helo or not? So every time the tree was executed, you would have to dive into the right sub-tree for that state.

Behavior Trees inside State Machines

After experiencing enough pain with the behavior tree approach, I stumbled upon a GDC video. In this talk, Bobby Anguelov mentions an approach that uses State Machines for… state, and Behavior Trees for actions. This is based on his experience creating AI for the Hitman series! Pretty cool.

This approach made so much sense to me that I figured it was worth implementing before I added anything new to the NPCs. So I built a simple version. The NPCs have a small set of states:

- Idle

- Attack

- Flee

- Hit

- Dead

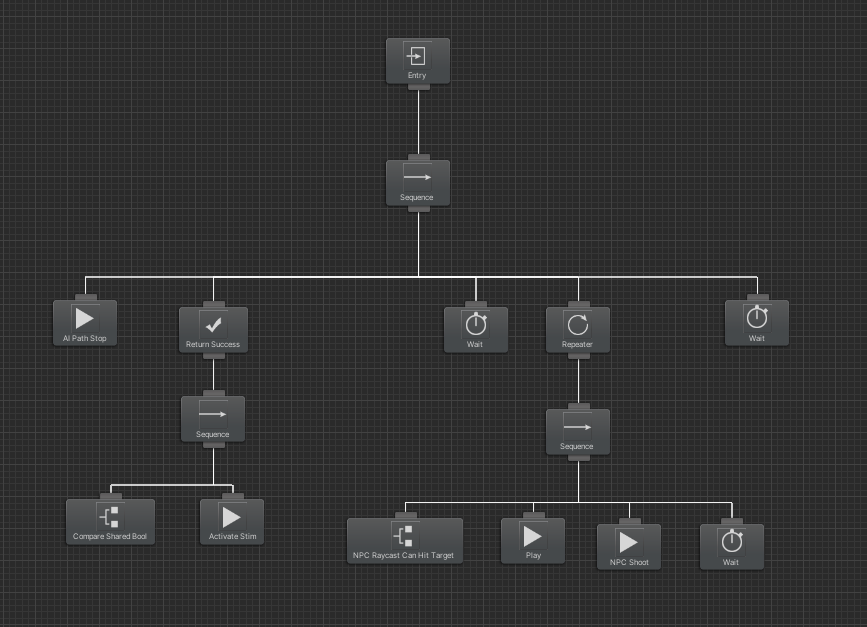

Each state contains its own behavior tree, which is set up when entering that state and cleaned up when leaving. These behavior trees are just actions. They aren’t making decisions and they aren’t running any sensors. They are just executing actions. This cleans things up a TON. Look at the attacking behavior tree, which is currently the most complex one:

The really cool thing about this is that you can update your knowledge separately from running the behavior. Previously the NPCs were doing raycasts every time they executed the behavior tree, but now they only need to update their knowledge base at the speed of a human reaction, and yet they can drive their behavior trees much faster.
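As a rough illustration of that split, here is a small Python sketch, not the game's actual code. The state machine owns the decisions and only runs when knowledge changes, while each state owns an action-only "tree" that gets ticked every frame. The `Knowledge` fields, the health threshold, and the action names are assumptions, the tree is reduced to a flat list of action names, and the Hit state is left out to keep it short.

```python
class Knowledge:
    """What the NPC believes about the world. Fields are hypothetical."""
    def __init__(self):
        self.enemy_visible = False
        self.health = 100


class State:
    name = "State"
    actions = ()  # stand-in for an action-only behavior tree

    def enter(self, npc):
        self.tree = list(self.actions)  # set up the tree on entry

    def exit(self, npc):
        self.tree = None  # clean it up on exit

    def tick(self, npc):
        for action in self.tree:  # no decisions, no sensors: just actions
            npc.log.append(action)


class Idle(State):
    name, actions = "Idle", ("look_around",)


class Attack(State):
    name, actions = "Attack", ("aim", "fire")


class Flee(State):
    name, actions = "Flee", ("run_to_cover",)


class Dead(State):
    name, actions = "Dead", ()


STATES = {cls.name: cls for cls in (Idle, Attack, Flee, Dead)}


def decide(knowledge):
    """All decision making lives here. The threshold of 30 is an assumption."""
    if knowledge.health <= 0:
        return "Dead"
    if knowledge.health < 30:
        return "Flee"
    if knowledge.enemy_visible:
        return "Attack"
    return "Idle"


class Npc:
    def __init__(self):
        self.knowledge = Knowledge()
        self.log = []
        self.state = None
        self.change_state(Idle())

    def change_state(self, new_state):
        if self.state is not None:
            self.state.exit(self)
        self.state = new_state
        self.state.enter(self)

    def update_knowledge(self, enemy_visible, health):
        """Slow path: called at roughly human reaction speed."""
        self.knowledge.enemy_visible = enemy_visible
        self.knowledge.health = health
        wanted = decide(self.knowledge)
        if wanted != self.state.name:
            self.change_state(STATES[wanted]())

    def tick(self):
        """Fast path: called every frame."""
        self.state.tick(self)


npc = Npc()
npc.update_knowledge(enemy_visible=True, health=100)
npc.tick()
npc.tick()
print(npc.state.name, npc.log)
```

The point of the sketch is the two entry points: `update_knowledge` can run a few times per second while `tick` runs every frame, and neither needs to know how often the other is called.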

Perception and Knowledge

In order to make decisions, the state machine needs some knowledge of the outside world. This is done by implementing “sensor” scripts that allow the NPC to see or hear things in the world. Each NPC updates their knowledge base about every 200-250ms (typical human reaction time) and will change state if needed.

These sensors react to “Stims” or AI Stimulations, which are just things you can drop in the world for an action that you want the NPCs to know about. So far I’m using these for: shots being fired, shots impacting a surface, explosions, all NPC and player positions, etc.
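A Stim can be thought of as a small record dropped into the world, and a sensor as a filter over those records. The Python sketch below is only an illustration under simplifying assumptions: the `Stim` fields and the `radius` idea are invented here, and perception is a plain distance check with no line-of-sight test.

```python
import math
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Stim:
    kind: str      # e.g. "shot_fired", "bullet_impact", "explosion"
    x: float
    y: float
    radius: float  # hypothetical: how far away the stim can be perceived


@dataclass
class Npc:
    x: float
    y: float
    knowledge: list = field(default_factory=list)

    def sense(self, stims):
        """Rebuild the knowledge base from every stim within range."""
        self.knowledge = [
            s for s in stims
            if math.hypot(s.x - self.x, s.y - self.y) <= s.radius
        ]


world = [
    Stim("explosion", 0.0, 0.0, radius=50.0),
    Stim("bullet_impact", 100.0, 0.0, radius=10.0),
]
npc = Npc(x=30.0, y=0.0)
npc.sense(world)
print([s.kind for s in npc.knowledge])
```

A real sensor would likely distinguish sight from hearing and check for obstacles, but the shape stays the same: stims go in, a filtered knowledge base comes out, and the state machine reads only the knowledge base.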

A trail of minigun bullet impacts creates “Stims” in the world that NPCs can see and hear.

The game also adds some randomness into their update timing, so it tends to spread the processing time across frames, making the game run smoother. I’ve yet to fully stress test this, but I’ve put about 50 NPCs in view at once with no problems.
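Here is one way the randomized timing could look, as a hedged Python sketch rather than the game's real scheduler. The `SensorTimer` class, the random initial offset, and the 60 FPS frame step are assumptions. Only the 200-250ms window comes from the description above.

```python
import random


class SensorTimer:
    """Decides when one NPC should refresh its knowledge base."""

    def __init__(self, rng, min_interval=0.200, max_interval=0.250):
        self.rng = rng
        self.min_interval = min_interval
        self.max_interval = max_interval
        # Assumed: a random first offset, so NPCs spawned on the same
        # frame do not all run their sensors on the same frame.
        self.next_update = rng.uniform(0.0, max_interval)

    def due(self, now):
        if now < self.next_update:
            return False
        # Pick a fresh random interval each time to keep updates spread out.
        self.next_update = now + self.rng.uniform(self.min_interval, self.max_interval)
        return True


def simulate(npc_count=50, frames=120, dt=1 / 60, seed=1):
    """Return how many NPCs ran their sensors on each frame."""
    rng = random.Random(seed)
    timers = [SensorTimer(rng) for _ in range(npc_count)]
    updates_per_frame = []
    for frame in range(frames):
        now = frame * dt
        updates_per_frame.append(sum(timer.due(now) for timer in timers))
    return updates_per_frame


counts = simulate()
print("busiest frame:", max(counts), "sensor updates out of 50 NPCs")
```

Without the jitter, 50 NPCs on the same fixed interval would all update on the same frame and leave the frames in between idle. With it, each frame handles only a handful.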

My favorite example of new behavior is the “RPG Warning”. When an enemy is about to fire an RPG (or other high damage weapon), they create an “RPG about to fire” Stim. If another NPC can see that, they will play an RPG warning voice line (RPG!!!) which also creates an audio stim. If another NPC sees the RPG enemy but already heard someone else yell the warning, they won’t repeat it.
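The "don't repeat the warning" rule falls out naturally once the warning itself is a stim. The Python sketch below shows the idea with made-up names (`react_to_rpg`, `can_see_rpg`, the stim strings) and one big assumption: every NPC is within earshot of the warning.

```python
from dataclasses import dataclass, field


@dataclass
class Npc:
    name: str
    can_see_rpg: bool
    lines: list = field(default_factory=list)  # voice lines this NPC played


def react_to_rpg(npcs, stims):
    """Process NPCs in update order; stims is the shared list of world stims."""
    for npc in npcs:
        sees_rpg = "rpg_about_to_fire" in stims and npc.can_see_rpg
        heard_warning = "rpg_warning" in stims  # assumed: everyone is in earshot
        if sees_rpg and not heard_warning:
            npc.lines.append("RPG!!!")
            stims.append("rpg_warning")  # the voice line is itself an audio stim


stims = ["rpg_about_to_fire"]
squad = [Npc("alpha", True), Npc("bravo", True), Npc("charlie", False)]
react_to_rpg(squad, stims)
print([(n.name, n.lines) for n in squad])
```

Because NPCs update at slightly different times, whoever notices first yells, and everyone updating afterwards finds the warning stim already in the world.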

All of this makes it possible for the NPCs to react to things in the world. Like being shot at.

Thank You

If you read this far, you need to be subscribed to my email list! https://clearedhotgame.com/email-list/

This is where I will release the first play test builds! I’ve already been interacting with some of you over email. Thanks for your thoughts and feedback; your emails are super motivating.